In a book published in 1958, mathematician John von Neumann made a controversial prediction: because of the major differences between computers and the human brain, it would be nearly impossible for technology to ever simulate the brain (1). Fifty-one years later, in 2009, neuroscientist Henry Markram stood on a TED stage and made the opposite claim: given ten years and enough funding and resources, he would be able to create an exact computer model of the human brain, down to each individual neuron. By extension, the model would be as intelligent as humans themselves (2).

Both of these scientists were game changers in artificial intelligence, or AI. Computers can generally perform the tasks they are programmed for far more quickly and accurately than the human brain. However, they struggle with creativity: adapting to new situations and finding novel insights that they weren’t explicitly told about. Thus, the field of AI began with the belief that by modeling the human brain, computers could develop intelligence comparable to that of humans. Many techniques have been adopted and discarded over the years, but this general premise has remained the same. The most prominent technique to emerge is the artificial neural network, or ANN, which is an attempt to mimic the neural network of the brain.

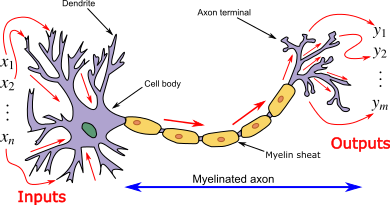

Neuroscientists in the 1950s hadn’t yet discovered the parts of the brain or the inner workings of memory; however, they understood the structure of the neural network. Each neuron receives signals from other neurons at branched extensions called dendrites. Once enough of these signals build up, the neuron sends its own signal out through its axon terminal. The brain is made up of a massive web of connected neurons, which combine to produce our cognition (3).

ANNs attempt to mimic this structure. Each “neuron” performs calculations: taking in inputs, adjusting them using weights, and summing them to produce its own output, which becomes an input to other neurons. The brain and ANNs learn similarly, by strengthening or weakening the connections between biological neurons and, correspondingly, adjusting the weights in ANNs.

The parts of a biological neuron (4)
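The calculation performed by an artificial neuron can be sketched in a few lines of Python. This is a minimal illustration, not a description of any particular ANN library: the function name `neuron_output`, the bias term, the sigmoid activation, and all of the numeric weights are assumptions chosen for the example.

```python
import math

def neuron_output(inputs, weights, bias=0.0):
    """Weighted sum of the inputs, squashed into (0, 1) by a sigmoid.

    The sigmoid and the bias are illustrative assumptions; the article
    only describes weighting and summing the inputs.
    """
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))

# Two first-layer neurons read the same raw inputs (hypothetical values)...
raw_inputs = [1.0, 0.5]
hidden = [
    neuron_output(raw_inputs, [0.8, -0.4]),
    neuron_output(raw_inputs, [-0.3, 0.9]),
]

# ...and their outputs become the inputs of a downstream neuron.
final = neuron_output(hidden, [1.2, -0.7])
```

Learning, in this picture, amounts to nudging the numbers in the weight lists until `final` moves closer to the desired answer.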

With growing computational power, ANNs have had major success and can be used to recognize objects and caption images with high accuracy. They model the brain’s layers of neurons: the first layer senses every pixel in the image, the second layer detects edges and shapes, and each subsequent layer adds further complexity until the network recognizes the image (5).

However, as von Neumann theorized, there are fundamental differences between the two systems. The brain receives a flood of information with a temporal component, as every signal that a dendrite receives lasts for only thousandths of a second. A neuron must receive many inputs within a certain time window in order to fire. ANNs, on the other hand, typically take in one fixed piece of data at a time and perform their calculations on that single instance (1). As a result, computers have difficulty analyzing a constantly changing environment like that of our world, while our brains handle it with ease.
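The timing requirement can be illustrated with a toy model. The sketch below is a heavy simplification of a real neuron: the function name `fires`, the 5 ms window, and the threshold of three inputs are all assumed values picked for illustration, not measurements.

```python
def fires(spike_times_ms, window_ms=5.0, threshold=3):
    """Return True if at least `threshold` input signals arrive
    within some span of `window_ms` milliseconds.

    Both parameters are illustrative assumptions.
    """
    times = sorted(spike_times_ms)
    start = 0
    for end in range(len(times)):
        # Move the start of the window forward until it spans at most window_ms.
        while times[end] - times[start] > window_ms:
            start += 1
        if end - start + 1 >= threshold:
            return True
    return False

clustered = [10.0, 11.5, 13.0]   # three inputs arriving within 3 ms
spread_out = [10.0, 30.0, 50.0]  # the same number of inputs, 20 ms apart

clustered_fires = fires(clustered)
spread_out_fires = fires(spread_out)
```

In this toy model the clustered inputs trigger the neuron and the spread-out inputs do not, even though both lists contain the same number of signals. A basic artificial neuron, by contrast, has no notion of when its inputs arrived.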

This seems counterintuitive. Given how far technology has advanced, the computer should far surpass the human brain in processing speed. A computer can perform 10 billion simple operations per second, whereas a neuron can fire at most 1000 times per second. This may suggest that the human brain should be no faster than an ancient computer. However, humans are able to make split-second decisions and react intelligently. This is because the computer performs most operations serially, one after another, while the brain works in parallel. A neuron doesn’t have to wait for a neuron in another part of the brain to fire; instead, the entirety of the brain operates at once (6).
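A rough back-of-the-envelope calculation shows how parallelism can offset slow individual neurons. The first two figures come from the paragraph above, and the neuron count is the 86 billion quoted in this article's closing paragraph. Treating one neuron firing as comparable to one computer operation is a simplifying assumption, and the result is only an upper bound, since real brains do not fire every neuron at its maximum rate at once.

```python
COMPUTER_OPS_PER_SEC = 10_000_000_000  # simple operations per second, from the text
NEURON_MAX_FIRINGS_PER_SEC = 1_000     # maximum firing rate of one neuron, from the text
NEURON_COUNT = 86_000_000_000          # neurons in the human brain, from the text

# One-on-one, the computer is vastly faster than a single neuron.
speed_ratio = COMPUTER_OPS_PER_SEC // NEURON_MAX_FIRINGS_PER_SEC

# Upper bound only: assumes every neuron fires at its maximum rate in parallel.
brain_peak_events_per_sec = NEURON_COUNT * NEURON_MAX_FIRINGS_PER_SEC

# How many times more events per second the parallel brain could reach, at most.
parallel_advantage = brain_peak_events_per_sec // COMPUTER_OPS_PER_SEC

print(f"Computer vs. single neuron: {speed_ratio:,}x faster")
print(f"Brain peak events per second: {brain_peak_events_per_sec:,}")
print(f"Parallel brain vs. serial computer (upper bound): {parallel_advantage:,}x")
```

A single neuron is ten million times slower than the computer, but tens of billions of them working simultaneously can, in principle, more than make up the difference.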

Ultimately, Markram’s attempt to simulate the human brain failed. Even modeling the 302 neurons of a roundworm has proven difficult, and modeling the 86 billion neurons of the human brain would be nearly impossible. Still, the basic structure of the brain has inspired successful computer algorithms. Perhaps as we learn more about the workings of the brain or create radically new technologies, this venture will succeed. However, there are limits to how well we can model a fundamentally different system.

References:

1) von Neumann, John. The Computer and the Brain. Yale University Press, 1958.

2) Markram, Henry. “A brain in a supercomputer.” TED, July 2009, https://www.ted.com/talks/henry_markram_a_brain_in_a_supercomputer?language=en

3) Queensland Brain Institute. “How do neurons work?” The University of Queensland, 9 November 2017, https://qbi.uq.edu.au/brain-basics/brain/brain-physiology/how-do-neurons-work

4) Vu-Quoc, L. “Neuron and myelinated axon, with signal flow from inputs at dendrites to outputs at axon terminals.” Diagram. Wikipedia, 2018, https://en.wikipedia.org/wiki/Biological_neuron_model

5) Clancy, Kelly. “Is the Brain a Useful Model for Artificial Intelligence?” Wired, 19 May 2020, https://www.wired.com/story/brain-model-artificial-intelligence/

6) Luo, Liqun. “Why is the Human Brain so Efficient?” Nautilus, 12 April 2018, http://nautil.us/issue/59/connections/why-is-the-human-brain-so-efficient