Is there something in my teeth? Can they hear what I am thinking? A world where someone can read your mind seems scary. However, for those who are speech impaired, a world where someone, or rather something, can read their minds cannot come soon enough.

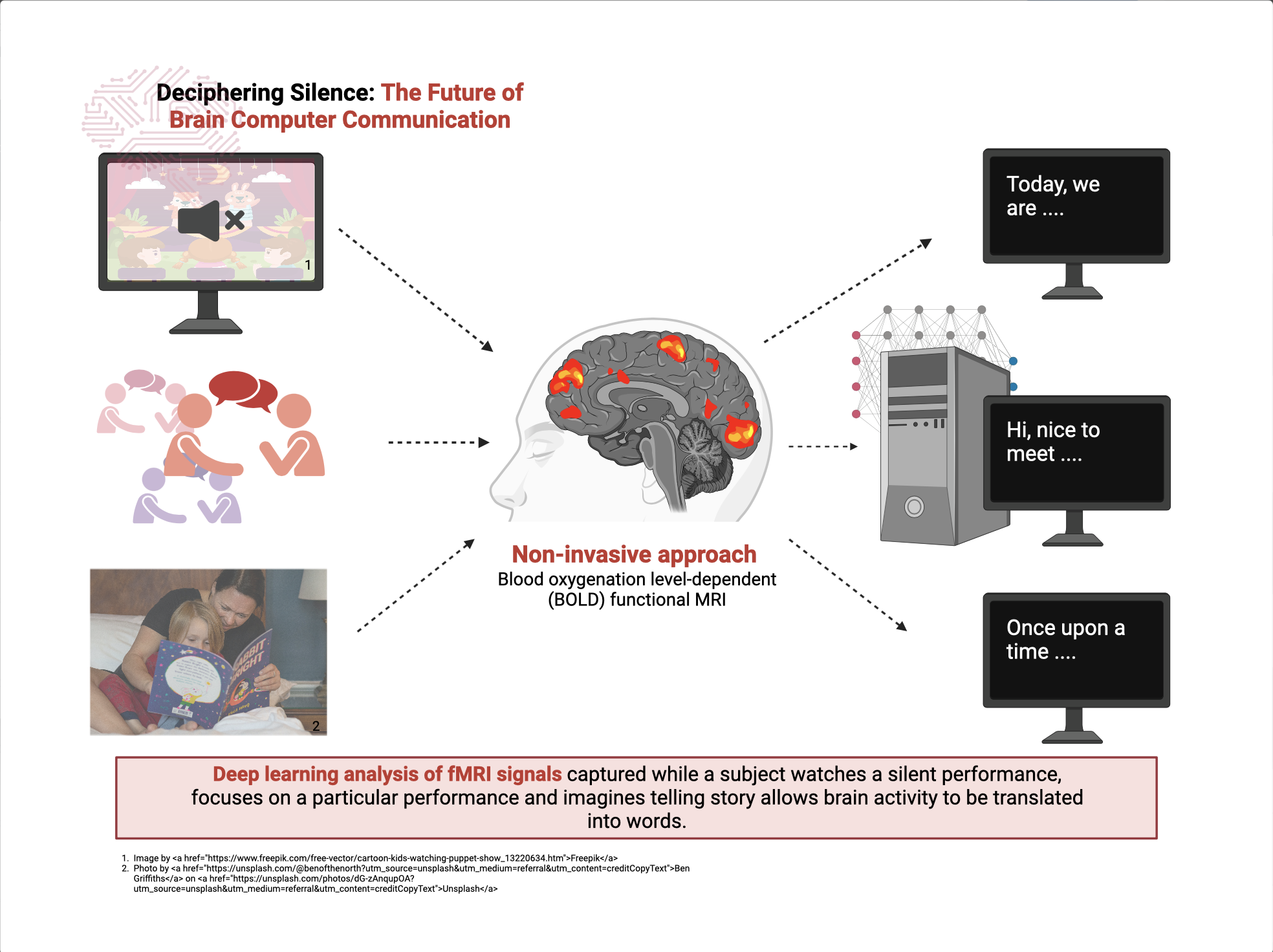

To rehabilitate the speech impaired, scientists have been working on a solution that allows continuous language (speech) to be decoded from brain activity using a computer. The technology, which falls under the umbrella of brain-computer interfaces (BCIs), uses deep learning to achieve this remarkable feat. Deep learning, loosely modeled on how the human brain learns, identifies which features of brain recordings best represent speech. A secondary algorithm then decodes those learned patterns into words that can be read on a computer screen.

However, the most successful implementations of this technology require neurosurgery to implant recording electrodes (Bocquelet et al., 2016). BCIs in general can translate brain activity into bionic arm control, phone navigation, and other valuable functions. Once electrodes are surgically implanted for speech, however, the technology is limited to the single function of speech extraction. A successful, non-invasive approach that offers rehabilitation and can be applied at the same time to augmentative applications is long overdue.

Researchers at the University of Texas at Austin have come up with a promising solution. In place of implanted electrodes, they use functional magnetic resonance imaging (fMRI) to record brain activity (Tang et al., 2023). Their study tests a non-invasive decoder’s performance and aims to better understand how the brain processes perceived and imagined language.

Seven participants underwent a series of language tasks designed to test this aim. They observed animated silent films and imagined narrating story segments, and a custom-built decoder translated the fMRI activity into words.

The decoder accurately predicted those imagined narrations (Tang et al., 2023). This success suggests that our brains process the stories we tell ourselves in much the same way as spoken words. When subjects were immersed in silent films, the decoder's predictions followed the films' plots, suggesting that our brains might be crafting a silent commentary.

Even more intriguing, in a world that's always “on” and always noisy, our brains have an uncanny ability to tune out the static. In an experiment that layered multiple speakers over one another, subjects attended to one speaker at a time (Tang et al., 2023). Amidst the cacophony, subjects could zero in on a chosen narrative, illustrating our brains' adaptive prowess.

Interestingly, the decoder's performance wavered when subjects tried to resist decoding. A final experiment tasked subjects with listening to a story segment while simultaneously performing a disruptive task, such as counting backward (Tang et al., 2023). The observed decrease in decoder performance suggests that subjects retain control over whether their thoughts can be decoded.

Professors Rainer Goebel and Tara Spires-Jones agree that the BCI's non-invasive nature is its highlight, but that it is not exempt from complications (Expert Reaction to Study Describing a Language Decoder Reconstructing Meaning from Brain Scans | Science Media Centre, n.d.). Its accuracy, especially when diving into the depths of inner speech, is still being refined. Moreover, as we try to implement this technology, ethical questions must be considered, especially concerning the privacy of our thoughts (Expert Reaction to Study Describing a Language Decoder Reconstructing Meaning from Brain Scans | Science Media Centre, n.d.).

Nonetheless, a future with BCI technology opens a myriad of possibilities. The worlds of entertainment, education, and work as we know them could be drastically improved. Imagine a life in which thoughts can manifest as a picture drawn on a computer screen without lifting a finger. Most important, however, are the safer, non-invasive rehabilitative possibilities for those unable to communicate verbally. BCI technology offers hope for their thoughts, dreams, and emotions, promising a voice to those who have long been silenced.

References

Bocquelet, F., Hueber, T., Girin, L., Chabardès, S., & Yvert, B. (2016). Key considerations in designing a speech brain-computer interface. Journal of Physiology-Paris, 110(4, Part A), 392–401. https://doi.org/10.1016/j.jphysparis.2017.07.002

Expert reaction to study describing a language decoder reconstructing meaning from brain scans | Science Media Centre. (n.d.). Retrieved August 2, 2023, from https://www.sciencemediacentre.org/expert-reaction-to-study-describing-a-language-decoder-reconstructing-meaning-from-brain-scans/

Saha, S., Mamun, K. A., Ahmed, K., Mostafa, R., Naik, G. R., Darvishi, S., Khandoker, A. H., & Baumert, M. (2021). Progress in Brain Computer Interface: Challenges and Opportunities. Frontiers in Systems Neuroscience, 15. https://www.frontiersin.org/articles/10.3389/fnsys.2021.578875

Tang, J., LeBel, A., Jain, S., & Huth, A. G. (2023). Semantic reconstruction of continuous language from non-invasive brain recordings. Nature Neuroscience, 26(5), 858–866. https://doi.org/10.1038/s41593-023-01304-9

The Future of AI: How AI Is Changing the World | Built In. (n.d.). Retrieved August 11, 2023, from https://builtin.com/artificial-intelligence/artificial-intelligence-future