As artificial intelligence (AI) generated content floods the digital world, the very foundation upon which large-language models are built is at risk of becoming tainted from its own doing). Large Language Models (LLMs) such as ChatGPT or Bard are Machine Learning models that operate specifically on human language. Drawing from the vast pool of text available on the internet, LLMs rely on a gargantuan collection of human-generated text to predict the next token in a sentence. These models play the guessing game of until a full sentence has been formed. For instance, given the sentence “the car was”, the model may predict the next token of “green”, “fast”, or “new”.

However, as AI-generated content becomes prolific on the Internet, if we are unable to differentiate and isolate this text, future corpora – the text database drawn on by natural language processing models – will utilize these AI-generated outputs (Collins). The more AI-generated content used in the prediction corpora for these models, the further a model’s output will stray from the original human-written truth. Due to this, as well as additional concerns in education and copyright, it is necessary to have a “watermark” on the output of a LLM, marking that a text was AI-generated (Lancaster 5).

Though there are several tactics to include a secret “watermark” within these text outputs, one main approach utilizes verification with a model’s seeded output (Lancaster 3). This technique relies on the usage of a randomized number as a seed in the LLM’s output generation, following the principle that an identical input should produce an identical output given that the seed is the same (Lancaster 3). This seed is used as a basis to select the next output token, favoring the seeded word given multiple options. For example, without seeding given the sentence “the car was” with the equal options of “green” and “red” for the next token, each color would be chosen half the time. However, with this seed, the seeded token will be selected every single time.

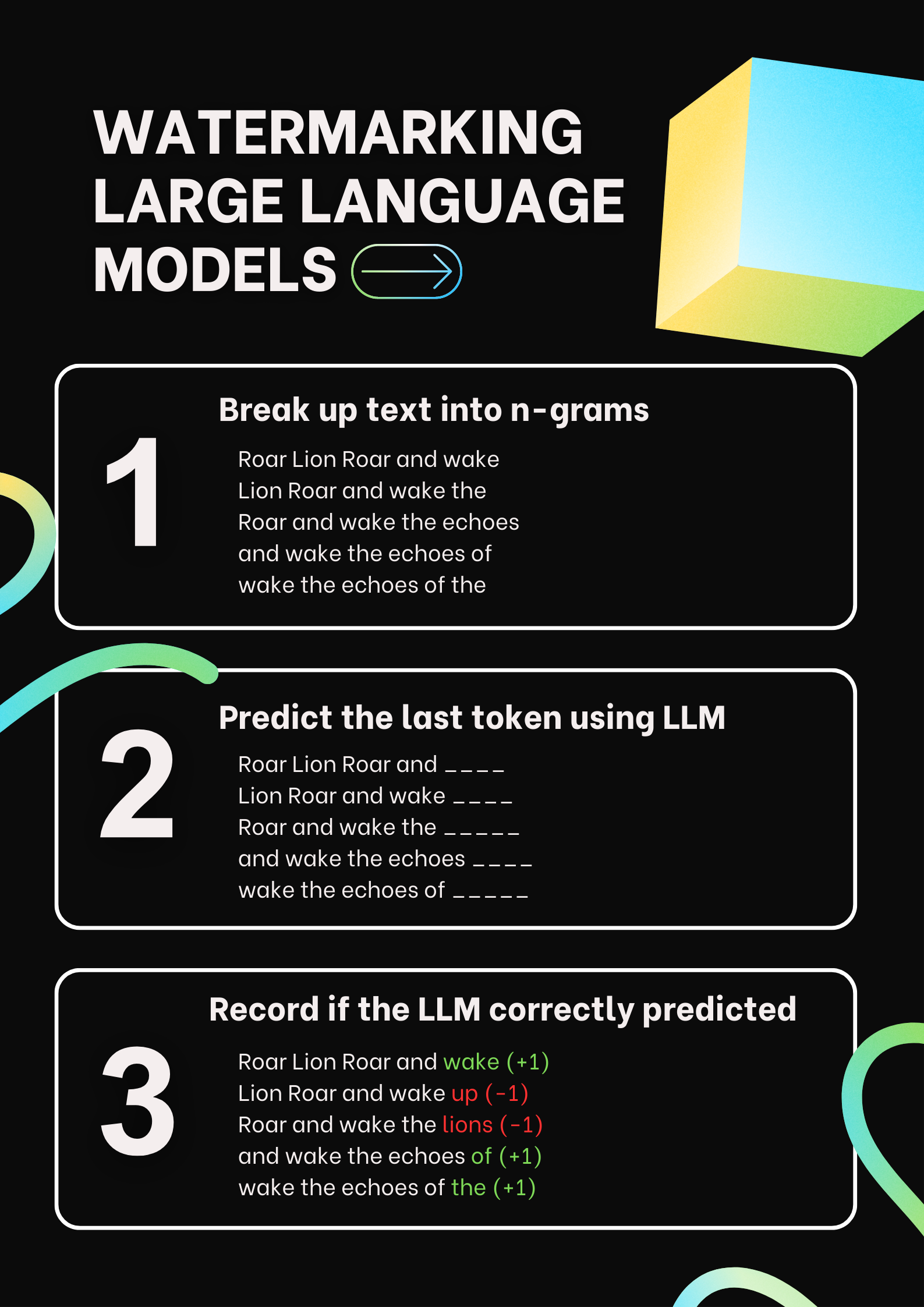

To detect this “watermark”, we utilize this principle by splitting up each text body into a series of n-grams of length 5 (Lancaster 7). For instance, the sentence “Roar lion roar, and wake the echos of the Hudson valley” would become [“Roar lion roar, and wake”, “lion roar, and wake the “, “roar, and wake the echos, … etc]. The detection agent would feed each n-gram into the LLM, asking it to predict the fifth word given the previous four words. The verifier records if the LLM correctly predicts the fifth word, eventually using this final tally to calculate the likelihood that a text was machine-generated. Without modifying a significant portion of the tokens, this watermark cannot be removed and allows us to detect machine-generated text (Kirchenbauer et al. 3).

However, this watermark is difficult to detect on low-entropy collections of tokens: words that present few options for the next word in a phrase. (Kirchenbauer et al. 2). For example, “Columbia” would be considered a low-entropy token since there is little variety in the next token used. More often than not, it would be “University”. Additionally, this technique can be easily defeated through simple paraphrase or more complicated tactics of lexical rearrangement within the LLM command input (Kirchenbauer et al. 10). For instance, by instructing the model to alter the tokens – such as by swapping vowels – the prediction options narrow, forcing the model to select the low-entropy token rather than a seeded option (Kirchenbauer et al. 10).

Despite these challenges, it is essential to continue exploring methods for preserving the authenticity of LLM outputs to maintain trust and reliability in AI-generated content for years to come.

Image of sentence being broken up into n-grams with probability of each next token option in the series

Image of sentence being broken up into n-grams with probability of each next token option in the series

References

Amrit, P., & Singh, A. K. (2022). Survey on watermarking methods in the artificial intelligence domain and beyond. Computer Communications, 188, 52–65. https://doi.org/10.1016/j.comcom.2022.02.023

Collins, K. (2023, February 17). How chatgpt could embed a ‘watermark’ in the text it generates. The New York Times. Retrieved August 2, 2023, from https://www.nytimes.com/interactive/2023/02/17/business/ai-text-detection.html.

Kirchenbauer, J., Geiping, J., Wen, Y., Katz, J., Miers, I., & Goldstein, T. (2023). A watermark for large language models. arXiv preprint arXiv:2301.10226.

Lancaster, T. (2023). Artificial Intelligence, text generation tools and chatgpt – does Digital Watermarking offer a solution? International Journal for Educational Integrity, 19(1). https://doi.org/10.1007/s40979-023-00131-6

Regazzoni, F., Palmieri, P., Smailbegovic, F., Cammarota, R., & Polian, I. (2021). Protecting artificial intelligence IPS: A survey of watermarking and fingerprinting for Machine Learning. CAAI Transactions on Intelligence Technology, 6(2), 180–191. https://doi.org/10.1049/cit2.12029