7:00 AM- Wake up

7:10 AM- Take a shower

7:25 AM- Brush teeth and hair

7:30 AM- Get dressed

7:40 AM- Eat breakfast

8:00 AM- Leave for work

For many people, this is a typical morning schedule. However, many elderly and disabled individuals can’t get through this routine without assistance. Recent research has presented a solution to assist with dressing, potentially making these individuals’ daily routines easier. What is this solution, you may ask? Robots!

Dressing is the daily activity that contributes most to the burden of caregivers (Dudgeon et al., 2008). Yet it’s also the daily activity that reportedly uses the least assistive technology (Dudgeon et al., 2008). So why hasn’t robotic assistance stepped in earlier? Even though robots have been able to grasp rigid household objects such as mugs and toothbrushes for over a decade (Saxena et al., 2008), they have struggled to manipulate deformable objects such as fabric. Because a piece of fabric can come to rest in a practically infinite number of configurations, teaching a robot how and where to pick it up is a complex task.

To solve this problem, scientists have developed computer vision methods that allow a robot to detect and measure clothing’s physical properties, including its wrinkles, edges, and borders (Jiménez & Torras, 2020). The most popular method is a 2D image processing procedure using Gabor filters. A Gabor filter responds to a particular spatial frequency and orientation in an image, so a set of such filters can measure which frequencies are present in a visual field (Baeldung, 2023). Notably, wrinkles exhibit lower frequencies than edges and borders (Jiménez & Torras, 2020), which allows Gabor filters to differentiate between these features. Robots can also use image processing to identify suitable grasping points for specific tasks. For instance, when unfolding a garment before dressing, a robot can identify and grasp each shoulder of a top using a 2D image processing approach similar to the one described above (Doumanoglou et al., 2014). Thanks to a range of innovative image processing techniques, robots are now capable of learning the steps needed to manipulate items of clothing.
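To make the frequency idea concrete, here is a minimal sketch in one dimension rather than 2D. It is not the procedure from the cited papers: the signals are synthetic sine waves standing in for a row of pixel intensities, and the function names, frequencies, and filter sizes are all assumptions chosen for illustration. A Gabor kernel is a cosine wave multiplied by a Gaussian envelope, so it responds strongly only when the signal contains the frequency it is tuned to.

```python
import math

def gabor_kernel(frequency, sigma=8.0, half_width=24):
    """Real 1D Gabor kernel: Gaussian envelope times a cosine carrier."""
    kernel = []
    for x in range(-half_width, half_width + 1):
        envelope = math.exp(-(x * x) / (2.0 * sigma * sigma))
        carrier = math.cos(2.0 * math.pi * frequency * x)
        kernel.append(envelope * carrier)
    # Subtract the mean so that flat, featureless regions give no response.
    mean = sum(kernel) / len(kernel)
    return [k - mean for k in kernel]

def response_energy(signal, kernel):
    """Mean squared filter response over all fully overlapping positions."""
    positions = len(signal) - len(kernel) + 1
    total = 0.0
    for start in range(positions):
        r = sum(signal[start + i] * kernel[i] for i in range(len(kernel)))
        total += r * r
    return total / positions

# Assumed tuning: cycles per pixel for "slow" and "fast" intensity changes.
LOW_FREQ, HIGH_FREQ = 0.05, 0.25
low_filter = gabor_kernel(LOW_FREQ)
high_filter = gabor_kernel(HIGH_FREQ)

def classify(signal):
    """Label a pixel row by which filter responds with more energy."""
    low = response_energy(signal, low_filter)
    high = response_energy(signal, high_filter)
    return "wrinkle-like" if low > high else "edge-like"

# Synthetic stand-ins: a slowly varying profile and a rapidly varying one.
wrinkle = [math.sin(2.0 * math.pi * LOW_FREQ * x) for x in range(200)]
edge = [math.sin(2.0 * math.pi * HIGH_FREQ * x) for x in range(200)]

print(classify(wrinkle), classify(edge))
```

A real system would apply 2D kernels at several orientations across a camera image; the sketch only shows why filters tuned to different frequencies can separate slowly varying features from rapidly varying ones.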

Dressing is a complex skill that calls for data-driven learning approaches, such as imitation learning and reinforcement learning (Yin et al., 2021). In imitation learning, a robot is given a set of demonstrations of a behavior, which it uses to learn a policy that mimics that behavior in similar situations (Garg, 2022). For example, a robot may be shown a video of a human driving a car. By learning to mimic the driver, the robot may be able to carry the skill over to similar vehicles, such as trucks and golf carts. Reinforcement learning, on the other hand, works by trial and error: the robot is “rewarded” for desired behavior and “punished” for undesired behavior through feedback signals (Carew, 2023). Both of these machine learning approaches have recently been applied to fabric manipulation. For example, imitation learning has been effective in teaching a robot how to flatten, fold, and twist a cloth (Jia et al., 2019), and reinforcement learning has taught a robot to fold a t-shirt (Tsurumine et al., 2019).
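As a rough illustration of imitation learning, the sketch below clones a behavior by nearest-neighbor lookup: given a new situation, it repeats the action from the most similar demonstration. This is one of the simplest possible forms of the idea and is not the method used in the cited studies. The demonstration data, the state format (a 2D position on a cloth), and the action names are all hypothetical.

```python
import math

# Hypothetical demonstrations: (x, y) position of a cloth feature -> expert action.
DEMONSTRATIONS = [
    ((0.1, 0.1), "grasp_left_corner"),
    ((0.9, 0.1), "grasp_right_corner"),
    ((0.5, 0.9), "pull_flat"),
]

def cloned_policy(state, demonstrations=DEMONSTRATIONS):
    """Return the expert action whose demonstrated state is closest to `state`."""
    _, action = min(demonstrations, key=lambda demo: math.dist(demo[0], state))
    return action

# A state the robot never saw still maps to the most similar demonstration.
print(cloned_policy((0.2, 0.15)))
```

The policy generalizes only as far as the demonstrations reach, which is one reason practical systems learn from many examples and use richer models than a lookup.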

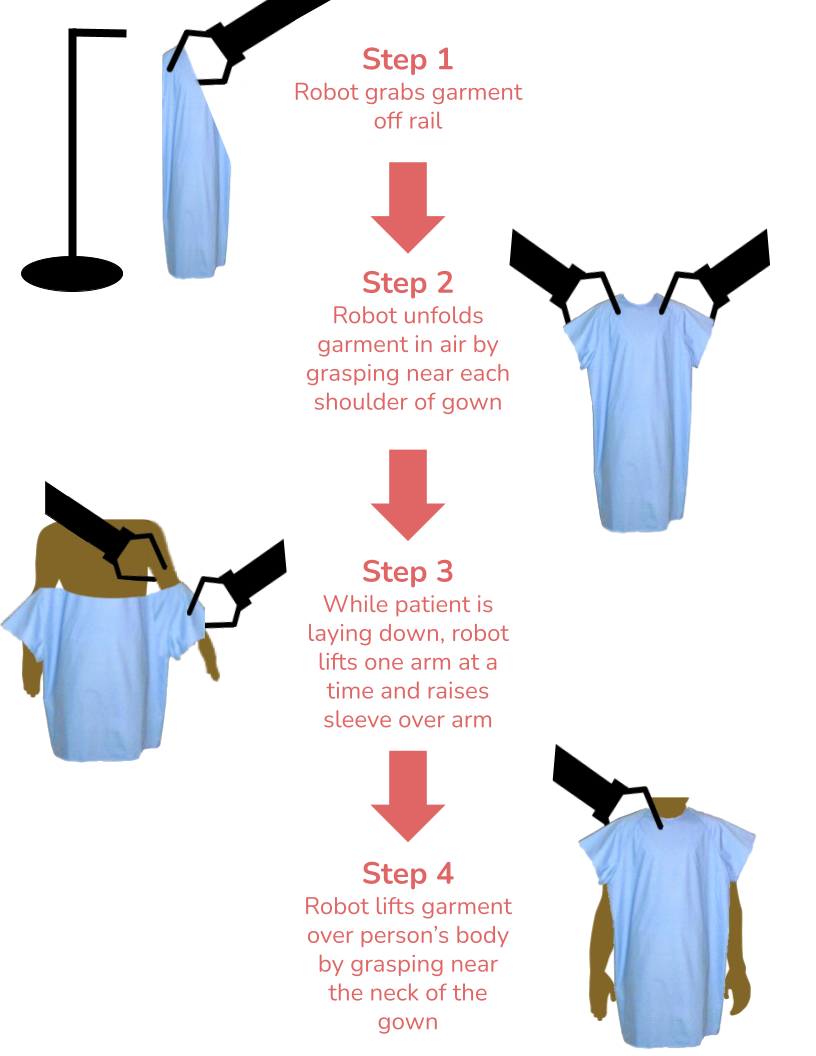

With developments in fabric perception and manipulation, robots have been able to apply such skills to dressing a human model. Recently, Fan Zhang and Yiannis Demiris developed a robust dressing model for individuals who have completely lost upper-limb mobility (Zhang & Demiris, 2022). To learn the fabric’s physical properties, their robot was presented with simulated and real-life demonstrations. The robot also used an image processing procedure to determine how and where to grasp the garment. Reinforcement learning was then applied to fine-tune the robot’s procedure, rewarding it when it grasped the garment in the correct location. As a result, the robot could follow a specific process of grasping and lifting to place a hospital gown on a medical training manikin. The accompanying figure displays their 4-step dressing pipeline in more detail. The robot also addressed safety concerns by operating at a moderate speed and staying within a maximum grip force while in contact with the manikin. Across 200 trials, the robot completed the entire pipeline with a 90.5% success rate.
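The reward idea can be sketched with a toy trial-and-error learner. The code below is not Zhang and Demiris’s system: it is a simple epsilon-greedy learner over a handful of candidate grasp points, with hypothetical point names and a made-up reward of +1 for the correct location and -1 otherwise. It only shows how repeated rewards steer a robot toward the right grasp.

```python
import random

def train_grasp_learner(candidates, correct, episodes=500, epsilon=0.1, seed=0):
    """Estimate the value of each grasp point by trial and error."""
    rng = random.Random(seed)
    values = {c: 0.0 for c in candidates}   # running average reward per point
    counts = {c: 0 for c in candidates}     # how often each point was tried
    for _ in range(episodes):
        if rng.random() < epsilon:
            choice = rng.choice(candidates)                   # explore
        else:
            choice = max(candidates, key=lambda c: values[c])  # exploit
        # Assumed reward signal: +1 for the correct location, -1 otherwise.
        reward = 1.0 if choice == correct else -1.0
        counts[choice] += 1
        values[choice] += (reward - values[choice]) / counts[choice]
    return values

# Hypothetical grasp points on a hospital gown.
points = ["hem", "sleeve", "collar", "back_tie"]
values = train_grasp_learner(points, correct="collar")
best = max(values, key=values.get)
print(best)
```

Because wrong choices lower a point’s estimated value and the correct choice raises it, the learner settles on the rewarded grasp point after a few attempts and keeps choosing it.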

Zhang and Demiris’s model paves the way for further advancements in robot-assisted dressing. Future research could heighten robots’ procedural accuracy, enhance the safety of human-robot interaction, and widen robots’ dressing abilities to include tasks such as putting on pants or buttoning a shirt. One step at a time, robots are making life easier for those who struggle with disabilities. Cheers to robots changing the world for the better!

References

Baeldung. (2023, June 19). How to use Gabor filters to generate features for machine learning. Baeldung on Computer Science. https://www.baeldung.com/cs/ml-gabor-filters

Carew, J. M. (2023, February 10). What is reinforcement learning?: Definition from TechTarget. Enterprise AI. https://www.techtarget.com/searchenterpriseai/definition/reinforcement-learning

Doumanoglou, A., Kargakos, A., Kim, T.-K., & Malassiotis, S. (2014). Autonomous active recognition and unfolding of clothes using random decision forests and probabilistic planning. 2014 IEEE International Conference on Robotics and Automation (ICRA), 987–993. https://doi.org/10.1109/icra.2014.6906974

Dudgeon, B. J., Hoffman, J. M., Ciol, M. A., Shumway-Cook, A., Yorkston, K. M., & Chan, L. (2008). Managing activity difficulties at home: A survey of Medicare beneficiaries. Archives of Physical Medicine and Rehabilitation, 89(7), 1256–1261. https://doi.org/10.1016/j.apmr.2007.11.038

Garg, D. (2022, November 1). Learning to imitate. SAIL Blog. http://ai.stanford.edu/blog/learning-to-imitate/

Jia, B., Pan, Z., Hu, Z., Pan, J., & Manocha, D. (2019). Cloth manipulation using random-forest-based imitation learning. IEEE Robotics and Automation Letters, 4(2), 2086–2093. https://doi.org/10.1109/lra.2019.2897370

Jiménez, P., & Torras, C. (2020). Perception of cloth in assistive robotic manipulation tasks. Natural Computing, 19(2), 409–431. https://doi.org/10.1007/s11047-020-09784-5

Saxena, A., Driemeyer, J., & Ng, A. Y. (2008). Robotic grasping of novel objects using vision. The International Journal of Robotics Research, 27(2), 157–173. https://doi.org/10.1177/0278364907087172

Tsurumine, Y., Cui, Y., Uchibe, E., & Matsubara, T. (2019). Deep reinforcement learning with smooth policy update: Application to robotic cloth manipulation. Robotics and Autonomous Systems, 112, 72–83. https://doi.org/10.1016/j.robot.2018.11.004

Yin, H., Varava, A., & Kragic, D. (2021). Modeling, learning, perception, and control methods for deformable object manipulation. Science Robotics, 6(54). https://doi.org/10.1126/scirobotics.abd8803

Zhang, F., & Demiris, Y. (2022). Learning garment manipulation policies toward robot-assisted dressing. Science Robotics, 7(65). https://doi.org/10.1126/scirobotics.abm6010