Abstract

Pshal P’shaw investigates the sonic instability of speech—where phonetic dissonance, vocal fragmentation, and gestural sound challenge structured linguistic norms. Developed during my residency at the Max Planck Institute for Empirical Aesthetics, this work explores how speech patterns morph in response to unfamiliar dialects, sonic environments, and computational intervention. Inspired by Hermann Finsterlin’s fluid, dimensionally unbound architectural forms, I approach language as an unfixed structure, revealing phonetic articulation's unstable, adaptive nature.

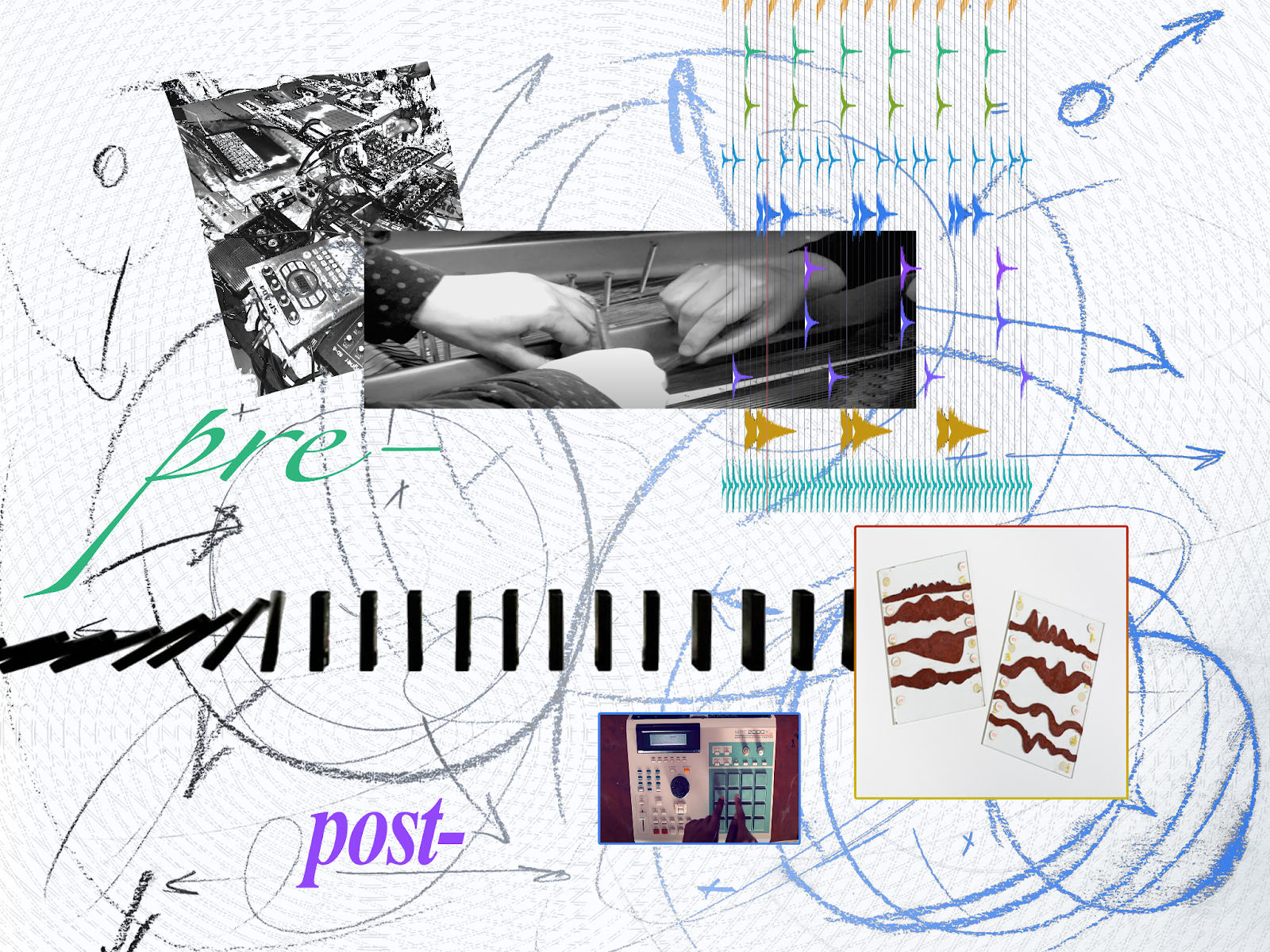

At its core, Pshal P’shaw is a multi-channel sculptural sound installation, neural instrument, and experimental phonetic composition that deconstructs oral adaptation and linguistic convergence. The project is based on 28 recorded voices engaging with a script designed to test the phonetic contours of a contemporary US Western dialect. Participants articulated the words in a controlled EEG laboratory setting, allowing analysis of phonetic deviation, mimicry, and emergent vocal patterns.

I collaborated with Cornelius Abel, head of the EEG laboratory at the Max Planck Institute for Empirical Aesthetics, to create a participant-based study of neural responses to phonetic adaptation and linguistic instability. The study examines how the brain processes disrupted phonetics, prosodic shifts, and unfamiliar articulatory patterns, providing insight into cognitive processing beyond structured linguistic norms.

By positioning phonetic instability as an expressive and cognitive site, this work aligns with my broader research on interstitial sonic spaces: the liminal areas between signal and noise, order and disruption, articulation and abstraction. The project extends into machine learning and human-machine interaction, where a neural algorithm dissects and reorganizes speech according to phonetic similarity and difference. The system draws on a granular database of more than 3,000 phonetic variations, revealing the rhythmic, percussive, and spectral structures embedded in vocal articulation.

Working with designer and Max/MSP specialist Matthew Ostrowski, I developed a custom Max/MSP script to explore how an AI-driven phonetic instrument can exist in a wandering state rather than a state of optimization. Over sessions spent envisioning a system that resists linear progression, we designed a model that moves fluidly between pattern recognition and deviation, continually reorienting itself within the dataset rather than seeking a fixed resolution. This keeps the AI suspended in a process of recombination, allowing for unexpected phonetic proximities, spectral collisions, and recursive vocal transformations.
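The wandering behavior described above can be illustrated with a small sketch. It is not the Max/MSP patch itself: it assumes each recorded grain has already been reduced to a numeric feature vector, and the function `wander` and its parameters `k`, `deviation_prob`, and `memory` are hypothetical. Most steps move to one of the nearest grains (pattern recognition), some steps jump to a distant grain (deviation), and a short memory of recently visited grains keeps the walk from settling into a loop, so it never converges on a single resolution.

```python
import math
import random
from collections import deque


def wander(corpus, start, steps, k=3, deviation_prob=0.2, memory=4, seed=None):
    """Return grain indices visited by a non-converging walk (hypothetical sketch).

    corpus: list of equal-length feature vectors, one per grain (assumed input).
    """
    rng = random.Random(seed)
    path = [start]
    recent = deque([start], maxlen=memory)  # short memory that blocks immediate loops
    current = start
    for _ in range(steps):
        # Rank every grain not visited recently by its distance from the current one.
        candidates = sorted(
            (i for i in range(len(corpus)) if i not in recent),
            key=lambda i: math.dist(corpus[current], corpus[i]),
        )
        if not candidates:
            break  # corpus too small for the chosen memory length
        if rng.random() < deviation_prob:
            # Deviation: jump somewhere in the farther half of the ranking.
            pool = candidates[len(candidates) // 2:]
        else:
            # Pattern recognition: step to one of the k nearest grains.
            pool = candidates[:k]
        current = rng.choice(pool)
        path.append(current)
        recent.append(current)
    return path


# Toy corpus: hypothetical 2-D descriptors (e.g. normalized centroid, duration).
toy_corpus = [(0.1, 0.2), (0.15, 0.22), (0.9, 0.8), (0.5, 0.5),
              (0.12, 0.18), (0.85, 0.75), (0.4, 0.6)]
print(wander(toy_corpus, start=0, steps=12, seed=7))
```

With a seeded generator, the same corpus and seed reproduce the same path, which is convenient for auditioning; raising `deviation_prob` makes the walk leap between distant regions of the corpus more often.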

The work employs the FluCoMa and MuBu toolkits within Max/MSP for phonetic and vocal analysis, mapping articulatory gestures through spectral decomposition, transient detection, and dynamic filtering. A MATLAB script developed by the EEG laboratory enabled phonetic feature extraction and computational manipulation. A 12-channel sculptural sound installation hosts the generative algorithm, continuously reshaping the recordings according to vocal proximity and divergence. Simultaneously, a 5-channel raster video system maps the frequency data of each voice onto facial deconstructions, amplifying the inherent instability of articulation.
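Transient detection, one of the analyses named above, can be sketched as a simple energy-ratio onset detector. This is an illustration under stated assumptions, not the method used by the Max/MSP toolkits in the project, which provide their own onset and transient analysis: `detect_transients`, the frame size, and the `ratio` and `floor` thresholds are hypothetical. The signal is split into non-overlapping frames, and a frame is flagged when its RMS energy jumps sharply relative to the frame before it, roughly the way a percussive attack such as a plosive stands out from surrounding speech.

```python
import math


def frame_rms(signal, frame_size):
    """Root-mean-square energy of consecutive, non-overlapping frames."""
    return [
        math.sqrt(sum(x * x for x in signal[i:i + frame_size]) / frame_size)
        for i in range(0, len(signal) - frame_size + 1, frame_size)
    ]


def detect_transients(signal, frame_size=256, ratio=4.0, floor=0.01):
    """Return sample offsets of frames whose energy jumps sharply.

    A frame counts as a transient when its RMS exceeds `floor` and is at least
    `ratio` times the RMS of the preceding frame (hypothetical thresholds).
    The first frame is never flagged because it has no predecessor.
    """
    energies = frame_rms(signal, frame_size)
    onsets = []
    for n in range(1, len(energies)):
        prev = max(energies[n - 1], 1e-12)  # avoid dividing by zero after silence
        if energies[n] > floor and energies[n] / prev >= ratio:
            onsets.append(n * frame_size)
    return onsets


# Synthetic signal: silence, a decaying burst, silence, then the same burst again.
burst = [0.5 * (-1) ** t * math.exp(-t / 100) for t in range(512)]
signal = [0.0] * 1024 + burst + [0.0] * 512 + burst
print(detect_transients(signal))  # → [1024, 2048]
```

Only the attack of each burst is reported; its decaying tail is quieter than the frame before it, so it does not trigger a second onset.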

A special-edition vinyl record with an accompanying booklet is available from Raster Media (Germany). The record carries the project’s inquiries into a fixed yet fragmented format, extending the phonetic research into a sonic document. Side A, “Transients Script,” isolates the percussive elements of speech, revealing the microtonal instability of articulation as a rhythmic force. Side B examines spectral interplay across the 28 voices, exposing harmonic shifts, tonal variances, and dynamic resonances.

By engaging with phonetic mimicry, algorithmic processing, and embodied sound, Pshal P’shaw interrogates how language operates beyond fixed linguistic hierarchies, where vocal adaptation and sonic fragmentation become sites of expanded meaning-making. In doing so, the work contributes to larger conversations in experimental phonetics, accessibility in assistive sound technologies, and the role of noise in cognitive and communicative systems. Through historical research on linguistic shifts in 19th-century US boomtowns and contemporary computational modeling, the project reimagines how phonetic structures evolve, break apart, and re-form, treating these changes not as errors but as integral components of human interaction and cognition.

Notes on Contributor

Victoria Keddie is an artist whose work explores sound beyond structured language, investigating how communication fractures and reconstitutes through error, interference, and technological mediation. For more than a decade she has been co-director of E.S.P. TV, which uses televisual media for performance. Her work has been performed and exhibited internationally, and she has held recent fellowships with NYSCA/NYFA, the Max Planck Institute for Empirical Aesthetics, Elektronmusikstudion, and the Bemis Center for Contemporary Art. Keddie’s video works are distributed by Lightcone (FR) and The Filmmakers Co-op (US), and her sound works are released by Raster Media (DE), Chaikin Records (US), and Fridman Gallery (NYC/US).

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Copyright (c) 2025 Victoria Keddie